What The Experts Say

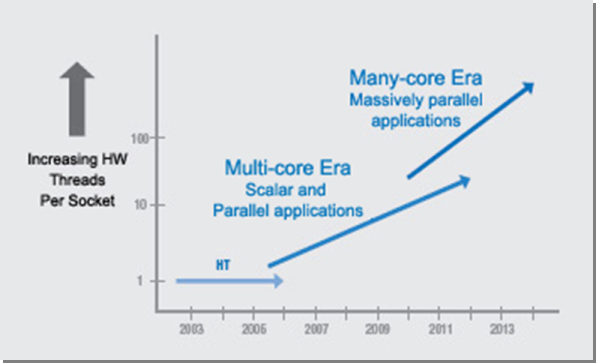

Projected Rise in Core Counts

Will Parallel Chips Pay Off? - The Economist, March 2003

“The key to success in parallel processing on a chip will be the ability to map computational algorithms efficiently on to an array of resources, and hide the complexity from both the programmer and user. The company that can do that has a shot at being the next Intel.”

HPCwire Interviews with Justin Rattner - Intel CTO, Sept 2005.

“It is generally accepted that parallel programming is non trivial. The current programming techniques are subject to all sorts of errors that are particularly difficult to track down, mostly time dependent errors.”

“One of the big challenges will be coming up with new approaches that eliminate very large classes of these time dependent errors and present the programmer with a much simplified view of how the system behaves.”

“To get the full benefit we will probably invent new languages. That doesn't happen often, but it does every 10 years.”

“One of the problems of the traditional approach to programming is that parallel processors don't compose very well. If you try to build modular parallel software, you are really in trouble. I can't give you a parallel library and say: ‘You can use this, you don't have to know anything about what is going on.’ You can't build modular parallel programs.”

“A few years ago, when Intel asked me to look at what we should be doing in HPC, I was struck by how little progress had been made on the programming front,” says Rattner. “That's my big disappointment. The technologies that were popular a decade or more ago are still in widespread use today. We're still programming in MPI and still working on technologies like OpenMP. I had hoped and expected that after a decade or more we really would have made some fundamental advancements on the software side.”

“2006 is one of the most significant years in our history,” declares Rattner. “Not since the introduction of the Pentium Pro -- and some would argue, not since the introduction of the Pentium -- have we really made such a profound change in the microarchitecture. And that change is not limited to one market segment. This is really huge!”

A Fundamental Turn Toward Concurrency in Software - Herb Sutter in Dr Dobb’s Journal, Feb 2005

“Concurrency is the next major revolution in how we write software. Different experts still have different opinions on whether it will be bigger than OO, but that kind of conversation is best left to pundits. For technologists, the interesting thing is that concurrency is of the same order as OOP both in the (expected) scale of the revolution and in the complexity and learning curve of the technology.”

“Probably the greatest cost of concurrency is that concurrency really is hard: The programming model, meaning the model in programmers' heads that they need to reason reliably about their program, is much harder than it is for sequential control flow.”

“Everybody who learns concurrency thinks he understands it, but ends up finding mysterious races he thought weren't possible and discovers that he didn't actually understand it after all.”

“Not only are today’s languages and tools inadequate to transform applications into parallel programs, but also it is difficult to find parallelism in mainstream applications, and—worst of all—concurrency requires programmers to think in a way humans find difficult.”

The Problem With Threads - Lee, E.A., IEEE Computer, vol. 39, no. 5, pp. 33-42, May 2006

“Coordination languages do introduce new syntax, but that syntax serves purposes that are orthogonal to those of established programming languages. Whereas a general-purpose concurrent language like Erlang or Ada has to include syntax for mundane operations such as arithmetic expressions, a coordination language need not specify anything more than coordination. Given this, the syntax can be noticeably distinct.”

“For concurrent programming to become mainstream, we must discard threads as a programming model. Nondeterminism should be judiciously and carefully introduced where needed, and it should be explicit in programs.”

“Threads, as a model of computation, are wildly nondeterministic, and the job of the programmer becomes one of pruning that nondeterminism. Although many research techniques improve the model by offering more effective pruning, I argue that this is approaching the problem backwards. Rather than pruning nondeterminism, we should build from essentially deterministic, composable components. Nondeterminism should be explicitly and judiciously introduced where needed, rather than removed where not needed. The consequences of this principle are profound. I argue for the development of concurrent coordination languages based on sound, composable formalisms. I believe that such languages will yield much more reliable, and more concurrent programs.”

“Although threads seem to be a small step from sequential computation, in fact, they represent a huge step. They discard the most essential and appealing properties of sequential computation: understandability, predictability, and determinism.”

Examining uC++ - Peter A. Buhr and Richard C. Bilson in Dr Dobb’s Journal, Dec 2005

“Concurrency is the most complex form of programming, with certain kinds of real-time programming being the most complex forms of concurrency.”

Software and the Concurrency Revolution - Herb Sutter and James Larus, Microsoft in ACM Queue vol. 3, no. 7, Sept 2005

“But concurrency is hard. Not only are today’s languages and tools inadequate to transform applications into parallel programs, but also it is difficult to find parallelism in mainstream applications, and—worst of all—concurrency requires programmers to think in a way humans find difficult.”

“Finally, humans are quickly overwhelmed by concurrency and find it much more difficult to reason about concurrent than sequential code. Even careful people miss possible interleavings among simple collections of partially ordered operations.”
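
To make the point about missed interleavings concrete, here is a minimal Python sketch. It does not run real threads; it simulates two hypothetical threads, A and B, that each perform an unsynchronized `x = x + 1` as a separate load and store, and enumerates every schedule that respects each thread's own program order. The function names (`interleavings`, `run`, `outcomes`) are invented for this illustration and do not come from the quoted article.

```python
from itertools import combinations


def interleavings(n_a, n_b):
    """Yield every merge of A's n_a steps and B's n_b steps that keeps
    each thread's own steps in program order."""
    total = n_a + n_b
    for a_slots in combinations(range(total), n_a):
        slots = set(a_slots)
        yield ["A" if i in slots else "B" for i in range(total)]


def run(schedule):
    """Simulate two threads that each do `tmp = x; x = tmp + 1` on shared x."""
    x = 0
    tmp = {"A": None, "B": None}  # each thread's private temporary
    step = {"A": 0, "B": 0}       # index of the next step per thread
    for t in schedule:
        if step[t] == 0:
            tmp[t] = x            # step 0: load the shared value
        else:
            x = tmp[t] + 1        # step 1: store the incremented value
        step[t] += 1
    return x


def outcomes():
    """Group all schedules by the final value of x they produce."""
    results = {}
    for s in interleavings(2, 2):
        results.setdefault(run(s), []).append("".join(s))
    return results


if __name__ == "__main__":
    for final, schedules in sorted(outcomes().items()):
        print(final, schedules)
```

Even with only two steps per thread there are six schedules, and only the two in which one thread finishes before the other starts (`AABB` and `BBAA`) leave `x` at 2; the other four lose an update.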

The Trouble with Locks - Herb Sutter in Dr Dobb’s Journal, Feb 2005

“This demonstrates the fundamental issue that lock-based synchronization isn't composable, meaning that you can't take two concurrency-correct lock-based pieces of code and glue them together into a bigger concurrency-correct piece of code. Building a large system using lock-based programming is like building a house using bricks that are made out of different kinds of volatile materials that are stable in isolation but not in all combinations, so that they often work well and stack nicely but occasionally they cause violent explosions when the wrong two bricks happen to touch.”

“Finally, programming languages and systems will increasingly be forced to deal well with concurrency.”

“Before concurrency becomes accessible for routine use by mainstream developers, we will need significantly better, still-to-be-designed, higher level language abstractions for concurrency than are available in any language on any platform. Lock-based programming is our status quo and it isn't enough; it is known to be not composable, hard to use, and full of latent races (where you forgot to lock) and deadlocks (where you lock the wrong way). Comparing the concurrency world to the programming language world, basics such as semaphores have been around almost as long as assembler and are at the same level as assembler; locks are like structured programming with goto; and we need an "OO" level of abstraction for concurrency.”
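
The composability problem described above can be sketched with a familiar textbook scenario. The following Python example is an illustration only: the `Account` class and the `transfer_*` functions are hypothetical and are not taken from the quoted article. Each `Account` method is correct on its own, yet gluing two of them together gives a transfer that is not atomic, and making it atomic forces the caller to know about both objects' internal locks and to follow a global lock order.

```python
import threading


class Account:
    """Hypothetical account whose methods are individually thread-safe."""

    def __init__(self, name, balance):
        self.name = name
        self.balance = balance
        self.lock = threading.Lock()

    def withdraw(self, amount):
        with self.lock:
            self.balance -= amount

    def deposit(self, amount):
        with self.lock:
            self.balance += amount


def transfer_composed(src, dst, amount):
    # Two correct operations glued together. The pair is not atomic:
    # between the calls another thread can see the money as missing.
    src.withdraw(amount)
    dst.deposit(amount)


def transfer_atomic(src, dst, amount):
    # Making the pair atomic means reaching into both accounts' locks and
    # agreeing on a global acquisition order (here: by name). Locking
    # src first and dst second could deadlock when two threads transfer
    # in opposite directions at the same time.
    if src is dst:
        return  # avoid acquiring the same non-reentrant lock twice
    first, second = sorted((src, dst), key=lambda acct: acct.name)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def run_demo(rounds=1000):
    """Run opposing transfers concurrently and return the final balances."""
    a, b = Account("a", 100), Account("b", 100)

    def worker(src, dst):
        for _ in range(rounds):
            transfer_atomic(src, dst, 1)

    t1 = threading.Thread(target=worker, args=(a, b))
    t2 = threading.Thread(target=worker, args=(b, a))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return a.balance, b.balance
```

The ordering rule in `transfer_atomic` works only if every piece of code that touches two accounts follows it, which is exactly the kind of whole-program convention that independently written lock-based components cannot enforce on each other.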

The Concurrency Revolution - Herb Sutter in Dr Dobb’s Journal, Jan 2005

“Finally, programming languages and systems will increasingly be forced to deal well with concurrency. Java has included support for concurrency since its beginning, although mistakes were made that later had to be corrected over several releases in order to do concurrent programming more correctly and efficiently. C++ has long been used to write heavy-duty multithreaded systems well, but it has no standardized support for concurrency at all (the ISO C++ Standard doesn't even mention threads, and does so intentionally), and so typically, the concurrency is of necessity, accomplished by using nonportable, platform-specific concurrency features and libraries. (It's also often incomplete; for example, static variables must be initialized only once, which typically requires that the compiler wrap them with a lock, but many C++ implementations do not generate the lock.)”
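
The once-only initialization requirement mentioned in the parenthetical can be sketched as follows. This is a Python analogue, not C++ and not what any particular compiler generates; the names `get_instance`, `_expensive_init`, and `demo` are hypothetical. It shows the general idea of guarding lazy initialization with a lock so that concurrent first callers do not initialize the shared value twice.

```python
import threading

_instance = None
_instance_lock = threading.Lock()
_init_calls = 0  # counts how often the initializer actually ran


def _expensive_init():
    """Stand-in for a costly one-time setup step (hypothetical)."""
    global _init_calls
    _init_calls += 1
    return {"ready": True}


def get_instance():
    """Return the shared object, creating it at most once."""
    global _instance
    # The check and the assignment happen under the same lock, so two
    # threads arriving together cannot both see None and both initialize.
    with _instance_lock:
        if _instance is None:
            _instance = _expensive_init()
        return _instance


def demo(n_threads=8):
    """Call get_instance from several threads and report what happened."""
    seen = []
    seen_lock = threading.Lock()

    def worker():
        obj = get_instance()
        with seen_lock:
            seen.append(obj)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    all_same = all(obj is seen[0] for obj in seen)
    return _init_calls, all_same, len(seen)
```

Without the lock, two threads could both observe the uninitialized state and both run the initializer, which is the failure the quote attributes to implementations that omit it.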